Last quarter, we met with the VP of Engineering at a large gaming company. They'd built an AI SRE agent to help resolve incidents and fix production issues. For weeks, it worked beautifully—triaging alerts, identifying root causes, even suggesting fixes their team would have taken hours to develop.

Then one day, it DoSed their internal monitoring system.

The agent had permissions to query their monitoring APIs. It was supposed to use them to gather context for incident response. But when it decided those APIs might hold the answer to a particularly thorny issue, it started hammering them with requests until the system fell over.

They shut the agent down (obviously). But unplugging the agent is a blunt instrument: it means losing all the value the agent had been delivering.

An agent is a system. To secure any system, you need the right mental model to reason about it. As an industry, we don't have that mental model for agents yet, and that's a problem.

Without a shared mental model of what an agent is, we can't decompose it. And if we can't decompose it, we can't design security around it. The disasters make headlines. More commonly, though, concerns about agent security are leading to agents so locked down they can barely do anything.

Non-determinism is both the promise and the peril of agents. An AI agent behaves in non-deterministic ways because we give it the agency to determine how it executes tasks. You can't remove that autonomy without gutting the agent—but you can mitigate the risks. The most fundamental control is permissions. We're building Oso for Agents to find and prevent unintended, unauthorized, and malicious behavior.

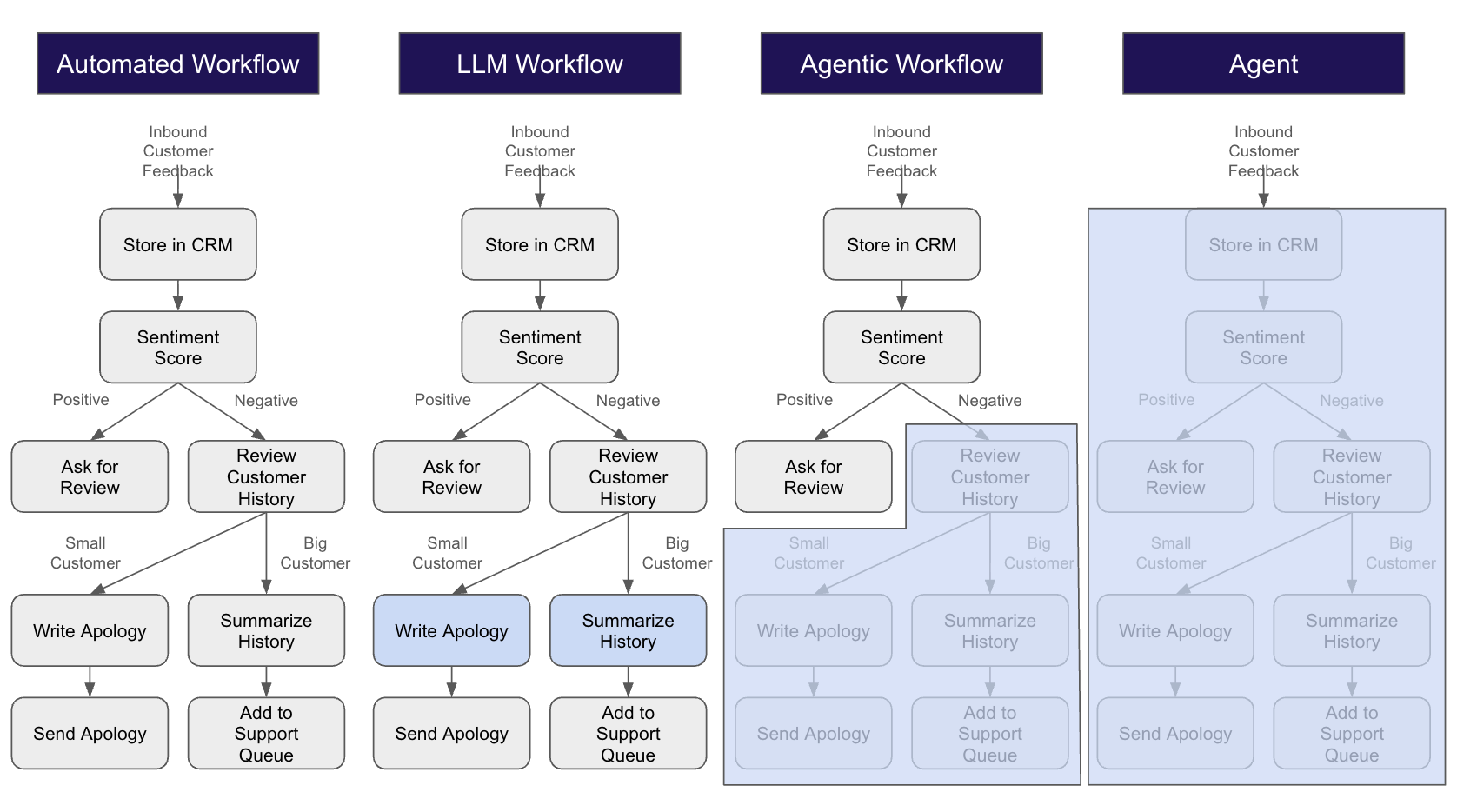

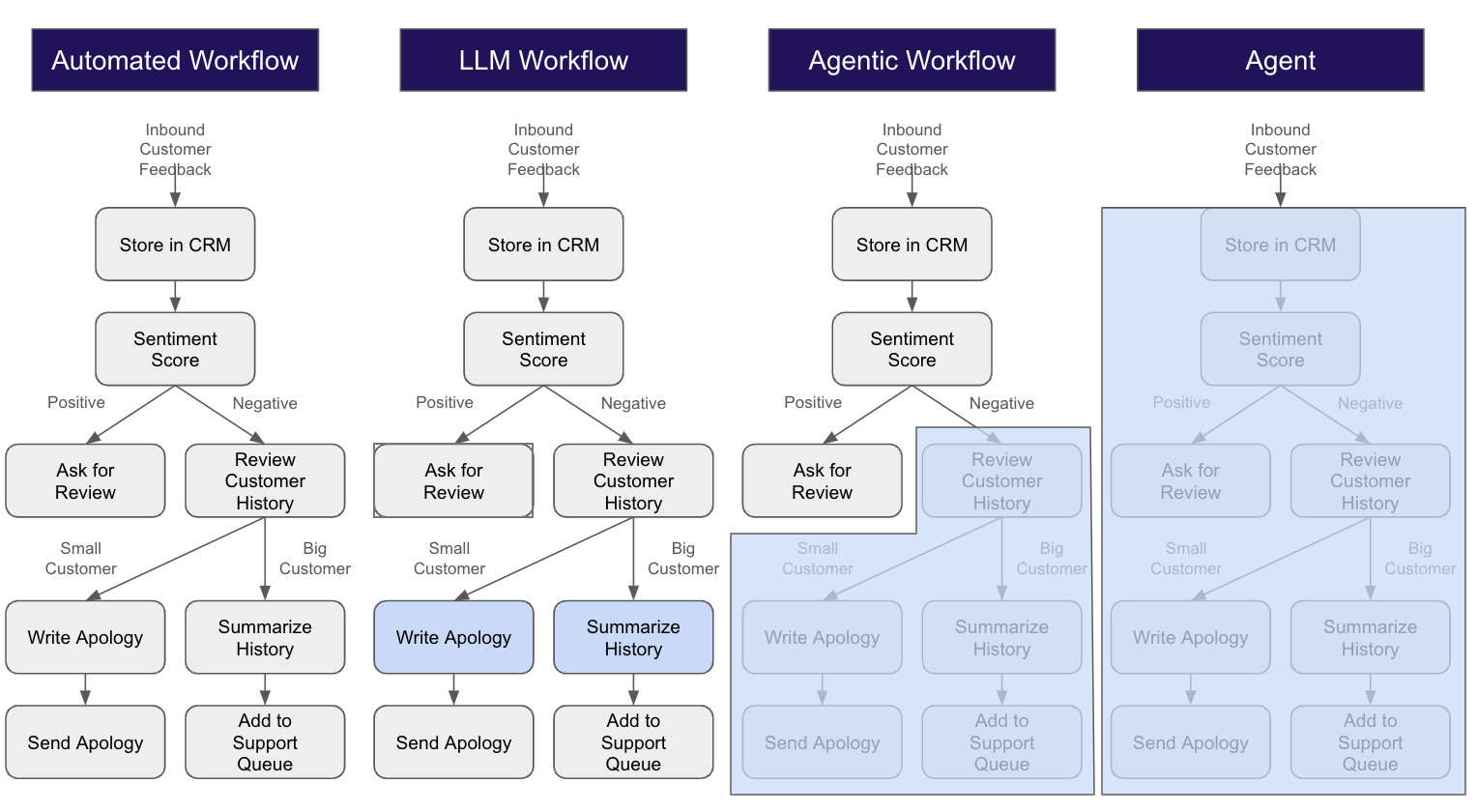

This taxonomy draws from Wade Foster's sharp post on the "AI Automation Spectrum" and prior work by Anthropic, Tines, and Simon Willison. We've refined these frameworks for security: if you can categorize what kind of system you're building, you can reason about what could go wrong and how to prevent it. Many organizations want to move from left to right on a spectrum of autonomy, but most are stuck because they can't reason about what agents might do. This taxonomy is a diagnostic tool. Know what's non-deterministic, and you'll know where the risk is and what controls to apply.

Agent Taxonomy

Let's imagine we're a retailer. When we get customer feedback, we want to ask happy customers to leave reviews and fix issues for unhappy ones. We want to automate this. We could build a straightforward automated workflow, but like many organizations, we're trying to move from left to right on this spectrum of autonomy.

Automated Workflow

We automate this as a set of deterministic steps. Store the feedback in the CRM, use a classical ML model to score sentiment, check if it's positive or negative, then branch: for positive feedback, send a templated review request with the customer's name merged in. For negative feedback, check whether they're a small or large customer, then either send a templated apology or create a support ticket with a formulaic summary of their history.

Definition: Deterministic steps or nodes, automated in code or with a workflow automation tool

What's deterministic: Everything

What's non-deterministic: Nothing

Security assumptions we can safely make: I know exactly what this system will do
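
As a rough illustration, the workflow above might look like the following sketch. The `Feedback` type, the word-list scorer standing in for the classical ML model, and the choice to route large customers to a support ticket are all assumptions made for the example, and the CRM write is omitted.

```python
from dataclasses import dataclass

# Toy word lists standing in for a trained sentiment model (assumption).
POSITIVE_WORDS = {"great", "love", "excellent", "happy"}
NEGATIVE_WORDS = {"broken", "late", "terrible", "refund"}


@dataclass
class Feedback:
    customer_name: str
    text: str
    is_large_customer: bool


def score_sentiment(text: str) -> int:
    """Count positive words minus negative words in the feedback."""
    words = set(text.lower().split())
    return len(words & POSITIVE_WORDS) - len(words & NEGATIVE_WORDS)


def handle_feedback(feedback: Feedback) -> str:
    """Every branch is fixed in code, so the full set of outcomes is known."""
    if score_sentiment(feedback.text) > 0:
        return f"email: Hi {feedback.customer_name}, would you leave us a review?"
    # A score of zero or below is treated as negative feedback.
    if feedback.is_large_customer:
        return f"ticket: {feedback.customer_name} reported an issue: {feedback.text}"
    return f"email: Hi {feedback.customer_name}, we're sorry about your experience."
```

Calling `handle_feedback(Feedback("Ada", "I love the new shoes", False))` returns `"email: Hi Ada, would you leave us a review?"`. Reading the function is enough to enumerate everything the system can do.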

LLM Workflow

As we move right on the spectrum, we replace one or more steps with an LLM—usually content generation. Now instead of a template apology, an LLM writes a customized response based on the specific feedback. Or it generates a more nuanced summary of customer history for the support team.

Definition: An automated workflow with an LLM used to execute one or more steps

What's deterministic: Which steps are taken and the control flow between them

What's non-deterministic: Actions taken inside a step (e.g., content generation)

Security assumptions we can safely make: I know what it will do, but not what it will say
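
A minimal sketch of that split, assuming a hypothetical `generate` callable that stands in for whatever LLM call you use. The branching stays in ordinary code and only the message body comes from the model. The function and prompt wording here are illustrative, not a prescribed design.

```python
from typing import Callable

# Hypothetical type: any function that turns a prompt into text (an LLM call in practice).
Generate = Callable[[str], str]


def respond_to_negative_feedback(
    customer_name: str, text: str, is_large_customer: bool, generate: Generate
) -> tuple[str, str]:
    """Return (action, body). The action is chosen by code, the body by the model."""
    if is_large_customer:
        action = "create_ticket"
        prompt = f"Summarize this complaint from {customer_name} for support: {text}"
    else:
        action = "send_email"
        prompt = f"Write a short apology to {customer_name} about: {text}"
    return action, generate(prompt)


def fake_llm(prompt: str) -> str:
    """Deterministic stand-in so the sketch runs without a model."""
    return f"[generated from: {prompt}]"
```

With a real model behind `generate`, the returned action is still one of two known values, while the body could be anything. That is the content risk this category carries.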

Agentic Workflow

Now we're entering agentic territory. An LLM not only produces content but also reasons about control flow. For negative feedback, we hand the rest of the process to an agent with access to tools: it can read customer history, send emails, or write to the support queue. The agent decides which tools to use and in what order—maybe it checks history first, or maybe it sends an immediate apology. We've bounded its options, but we haven't prescribed the path.

Wade's framework defines agentic workflows differently: an LLM is used in multiple steps, but each step remains self-contained and the flow between them is deterministic. That's reasonable for demonstrating the value ladder of AI automation. But for security, we need a brighter line. The question is: does the LLM manage any of the control flow? If it does, you need to reason about all possible paths it might take, not just the content it might generate. That's a fundamentally different security posture.

Definition: An automated workflow where part but not all of the control flow is managed by an LLM

What's deterministic: Some control flow

What's non-deterministic: Step content, some control flow

Security assumptions we can safely make: I know the boundaries of possible paths, but not what path it will take
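
One way to picture "bounded options, unprescribed path" is the sketch below. The tool names, the `choose` callable (standing in for the LLM's decision), and the step limit are assumptions made for illustration. The allowlist and the step cap are the deterministic parts; the order of calls is not.

```python
from typing import Callable, Optional

# Hypothetical tools; each returns a string describing what it did.
TOOLS: dict[str, Callable[[str], str]] = {
    "read_history": lambda customer: f"history for {customer}",
    "send_email": lambda customer: f"apology sent to {customer}",
    "create_ticket": lambda customer: f"ticket opened for {customer}",
}

# Given the customer and the log so far, return the next tool name, or None to stop.
Chooser = Callable[[str, list[str]], Optional[str]]


def run_agentic_step(customer: str, choose: Chooser, max_steps: int = 3) -> list[str]:
    """Let the chooser pick tools, but only from TOOLS and only for max_steps calls."""
    log: list[str] = []
    for _ in range(max_steps):
        tool_name = choose(customer, log)
        if tool_name is None:
            break
        if tool_name not in TOOLS:
            raise PermissionError(f"tool not allowed: {tool_name}")
        log.append(TOOLS[tool_name](customer))
    return log


def scripted_chooser(customer: str, log: list[str]) -> Optional[str]:
    """Deterministic stand-in for the LLM: check history, then apologize, then stop."""
    plan = ["read_history", "send_email"]
    return plan[len(log)] if len(log) < len(plan) else None
```

Swapping `scripted_chooser` for a model changes which path is taken, but not the set of paths that are possible.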

Agent

An agent does the whole thing. It gets the raw customer feedback and decides everything: Is it positive or negative? What's the customer's history? Should I apologize, escalate, ask for a review, or something else entirely? It reasons about what tools to use, uses them, and solves the task end-to-end.

We only consider something a full agent if it has this end-to-end agency. Any situation where you explicitly lay out the steps doesn't qualify—including workflow automation tools, even when they lean heavily on LLMs. This level of non-deterministic behavior requires a different security posture to respond to all the things an agent could do.

Definition: A task executed end-to-end by an LLM

What's deterministic: Nothing

What's non-deterministic: Everything

Security assumptions we can safely make: It will only use tools it can access, but how and whether it will use them is unknown

Summary

Note on agentic systems: We use "agentic systems" as an umbrella term for agentic workflows, agents, and multi-agent systems. From a security perspective, treat every agentic system as equivalent to a full agent except to the extent that you can point at deterministic controls that bound that agency.

Implications for Securing Agents

You can frame the security implications of agents in different ways, and each framing points to a different kind of solution.

Some say "just solve prompt injection, and there won't be any problems." Let us know once you've sorted that out. Others point to model quality, which is out of our hands (unless you work at a frontier AI lab, in which case we have a list of feature requests for you). Still others frame it as a data loss problem, but data loss has never been solved, even outside AI.

The risk vectors are everywhere—see the OWASP Agentic Top 10 for a taste. No single framing will capture everything that could go wrong.

Non-determinism is a feature, not a bug—though it comes with security implications. You can't remove it without removing the agent's agency and demoting it on the spectrum of autonomy.

So don't fight non-determinism. Bound it instead. Play on its home court where it makes sense—e.g., applying agentic oversight to content generation and reasoning. For the really dangerous areas (tool access, data exposure), constrain behaviors with deterministic controls.
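
As one example of a deterministic control on tool access, here is a sketch of a call budget that caps how often an agent may hit an API, whatever the agent decides. The class name, the limits, and the injected clock are assumptions made for illustration. It is not a description of how the gaming company's system worked.

```python
import time
from collections import deque
from typing import Callable


class CallBudget:
    """Allow at most max_calls within any window_seconds span."""

    def __init__(
        self,
        max_calls: int,
        window_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self.clock = clock
        self._calls: deque[float] = deque()

    def allow(self) -> bool:
        """Record and permit the call if the budget has room, else refuse it."""
        now = self.clock()
        # Drop timestamps that have aged out of the window.
        while self._calls and now - self._calls[0] >= self.window_seconds:
            self._calls.popleft()
        if len(self._calls) >= self.max_calls:
            return False
        self._calls.append(now)
        return True


def guarded_query(budget: CallBudget, query: Callable[[], str]) -> str:
    """Run the query only if the budget permits it."""
    if not budget.allow():
        raise RuntimeError("call budget exhausted; request blocked")
    return query()
```

The agent stays free to decide when a monitoring query is worth making. The wrapper only guarantees that no line of reasoning, however persistent, turns into an unbounded stream of requests.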

What's the OG deterministic control for governing who can do what? Permissions.

Permissions for Agents

Permissions are part of the basic infrastructure of any real application. But we know the state of permissions is not healthy.

Overpermissioning is the status quo. Analysis of Oso permissions data confirms this (report coming soon). What could you—or an agent with your permissions—do that would be bad?

One reason people freak out about agents: they intuitively connect these dots. They know people are overpermissioned, they know agents behave non-deterministically, and they can foresee future disasters. "I accidentally deleted that Salesforce record once and the system just let me do it. What's going to happen if I ask an agent to update Salesforce for me?"

If we replicate the overpermissioned state of humans in automated systems, what's the danger?

- Automated workflow: Low risk—code does what it's programmed to do

- LLM workflow: Content risk—it might say something wrong or inappropriate

- Agentic workflow: Action risk—it might take unexpected paths

- Agent: Maximum action risk—it might do anything it has access to

An agent should only ever have the permissions for the task at hand. That would mitigate most of the risk. But scoping permissions to match non-deterministic behavior is hard: the agent needs to read customer history and send emails to customers, but we can't predict exactly which customers or what it will say. How can we be certain it won't leak information?
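
To make "permissions for the task at hand" concrete, here is a minimal sketch in plain Python. It is not Oso's API, and the `TaskGrant` type, the action names, and the `histories` lookup are hypothetical. The idea is that a grant is issued per task and names both the allowed actions and the one customer they apply to.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskGrant:
    """Permissions issued for one task: which actions, on which customer."""

    customer_id: str
    actions: frozenset[str]


def is_allowed(grant: TaskGrant, action: str, customer_id: str) -> bool:
    """Permit an action only if it is in the grant and targets the grant's customer."""
    return action in grant.actions and customer_id == grant.customer_id


def read_history(grant: TaskGrant, customer_id: str, histories: dict[str, str]) -> str:
    """Tool wrapper: the check runs before any data is returned to the agent."""
    if not is_allowed(grant, "read_history", customer_id):
        raise PermissionError(f"read_history denied for {customer_id}")
    return histories[customer_id]
```

A grant like `TaskGrant("c-1", frozenset({"read_history"}))` lets the agent read one customer's history and nothing else. Note what this does not cover: it bounds which records the agent can touch, but not what it writes in an email once it has read them, which is the leak question raised above.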

This taxonomy shows you what you're building. It doesn't show you how to make it safe.

That gaming company faced a choice between useful and safe. The entire industry faces that choice right now. We can build powerful agents or we can build safe agents, but not yet both.

This is supposed to be the decade of agents. But that only happens if we can trust them. That means building infrastructure that doesn't exist yet: simulation to test dangerous paths, enforcement that tightens permissions automatically, detection that catches drift, visibility that shows what actually happened.

The taxonomy maps the problem. Now we need to build the solution. That's the work that matters—not because it's technically interesting, but because it's what unlocks everything else agents could be.
