tl;dr

Why Is Setting Permissions for AI Agents Complex?

Delegated vs. Application-Wide Access

A Framework for Setting Permissions

Tradeoffs & Operational Considerations

Setting permissions for AI agents is a complex problem.

Why are permissions relevant to AI agents? All AI agents need context to function, and that context is typically fetched from a database, a CRM, or another shared source. This creates a problem: external data stores are protected by access rules that define who can see what. If an AI agent does not mirror those same permissions, it could expose protected data.

For example, a personal payroll AI agent might access a company's payroll data to answer an employee's question about their own salary. If permissions aren't properly mirrored, the agent could expose another employee's compensation details when asked. This risk stems from asymmetric access: the AI agent can see everyone's compensation, but the employee can see only their own.
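To make mirroring concrete, here is a minimal Python sketch of the payroll example. It assumes a hypothetical in-memory `SALARIES` table and a hypothetical `payroll_admin` role. The key idea is that the agent's tool checks the invoking user's permissions, not the agent's own.

```python
from dataclasses import dataclass

# Hypothetical in-memory stand-in for the company's payroll database.
SALARIES = {"alice": 120_000, "bob": 95_000}

@dataclass(frozen=True)
class User:
    user_id: str
    roles: frozenset = frozenset()

def can_view_salary(user: User, employee_id: str) -> bool:
    # Mirror the payroll system's rule: employees see only their own salary,
    # while a hypothetical "payroll_admin" role can see everyone's.
    return user.user_id == employee_id or "payroll_admin" in user.roles

def agent_get_salary(invoking_user: User, employee_id: str) -> int:
    # The agent can technically read every row, so it must check the
    # *invoking user's* permissions before answering.
    if not can_view_salary(invoking_user, employee_id):
        raise PermissionError(
            f"{invoking_user.user_id} may not view {employee_id}'s salary"
        )
    return SALARIES[employee_id]

alice = User("alice")
print(agent_get_salary(alice, "alice"))  # prints 120000
```

Asking the same agent for `agent_get_salary(alice, "bob")` raises `PermissionError`, even though the agent itself can read Bob's row.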

Memory makes the problem even harder. Agents remember things, and that memory is necessary for accuracy. As a result, developers cannot fix a single permissions lapse just by adding safeguards going forward. If an agent may have retained privileged information, the system must also wipe that information from the agent's memory.
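One way to make that wipe targeted rather than all-or-nothing is to tag each memory with the records it was derived from. The sketch below assumes a simple in-process store. `AgentMemory` and the `payroll:`/`crm:` record IDs are hypothetical. A real agent would apply the same provenance idea to whatever vector store or database backs its memory.

```python
from dataclasses import dataclass, field

@dataclass
class MemoryEntry:
    text: str
    # IDs of the source records this memory was derived from (its provenance).
    source_ids: set[str] = field(default_factory=set)

@dataclass
class AgentMemory:
    entries: list[MemoryEntry] = field(default_factory=list)

    def remember(self, text: str, source_ids: set[str]) -> None:
        self.entries.append(MemoryEntry(text, set(source_ids)))

    def purge_sources(self, revoked_ids: set[str]) -> int:
        """Drop every memory derived from a record the agent may no longer see.

        Returns the number of entries removed.
        """
        before = len(self.entries)
        self.entries = [e for e in self.entries if not (e.source_ids & revoked_ids)]
        return before - len(self.entries)

memory = AgentMemory()
memory.remember("Alice's salary figure", {"payroll:alice"})
memory.remember("Summary of an open CRM deal", {"crm:deal-7"})
removed = memory.purge_sources({"payroll:alice"})  # removes 1 entry
```

A memory derived from several records is removed as soon as any one of them is revoked. This errs on the side of forgetting too much rather than leaking.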

Today, I want to explore how to build the right framework around AI agent permissions, explicitly outlining how to safely mirror access.

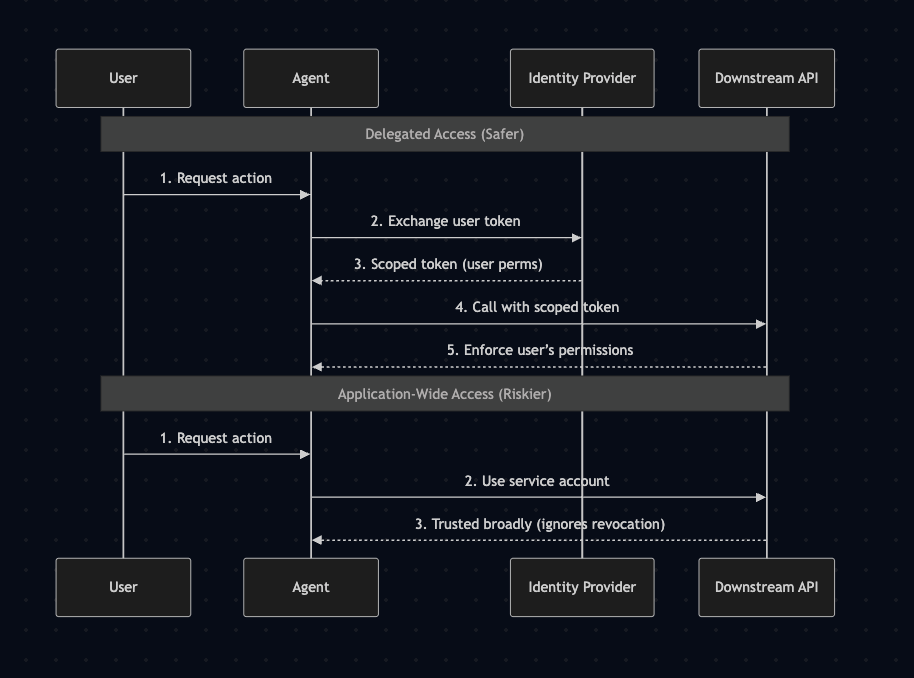

When first setting up AI agents, many teams default to application-wide access. They provision a single service account with universal access so the agent can reach anything. It's easy to set up and avoids friction, but it violates the principle of least privilege. If every request runs under the same all-access identity, anyone who interacts with the AI agent could use it to reach protected information.

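Instead, AI agents should be invoked with delegated access. With delegated access, the agent inherits the identity of the user who invokes it. This boils down to a few steps:

1. The user asks the agent to perform an action.
2. The agent exchanges the user's token with the identity provider.
3. The identity provider returns a token scoped to the user's permissions.
4. The agent calls the downstream API with that scoped token.
5. The API enforces the user's permissions on the request.

These permissions are evaluated in real time. If the user loses access to a record or a file, so does the agent.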

Mermaid code for the diagram above:

```mermaid
sequenceDiagram
    participant User
    participant Agent
    participant IdP as Identity Provider
    participant API as Downstream API

    Note over User,API: Delegated Access (Safer)
    User->>Agent: 1. Request action
    Agent->>IdP: 2. Exchange user token
    IdP-->>Agent: 3. Scoped token (user perms)
    Agent->>API: 4. Call with scoped token
    API-->>Agent: 5. Enforce user’s permissions

    Note over User,API: Application-Wide Access (Riskier)
    User->>Agent: 1. Request action
    Agent->>API: 2. Use service account
    API-->>Agent: 3. Trusted broadly (ignores revocation)
```
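Step 2 of the delegated flow is often implemented with OAuth 2.0 Token Exchange (RFC 8693), assuming the identity provider supports it. The sketch below only builds the form-encoded request body. The audience URL and scope names are placeholders, and how the agent authenticates itself to the token endpoint depends on the provider.

```python
from typing import Optional
from urllib.parse import urlencode

# Identifiers defined by RFC 8693.
TOKEN_EXCHANGE_GRANT = "urn:ietf:params:oauth:grant-type:token-exchange"
ACCESS_TOKEN_TYPE = "urn:ietf:params:oauth:token-type:access_token"

def build_token_exchange_request(
    user_token: str,
    audience: str,
    scopes: list[str],
    agent_token: Optional[str] = None,
) -> str:
    """Form-encoded body the agent would POST to the IdP's token endpoint."""
    params = {
        "grant_type": TOKEN_EXCHANGE_GRANT,
        "subject_token": user_token,  # the invoking user's token
        "subject_token_type": ACCESS_TOKEN_TYPE,
        "requested_token_type": ACCESS_TOKEN_TYPE,
        "audience": audience,  # the downstream API the token is for
        "scope": " ".join(scopes),  # requested scopes; the IdP decides what to grant
    }
    if agent_token is not None:
        # Optional delegation semantics: identify the agent as the actor
        # acting on the user's behalf.
        params["actor_token"] = agent_token
        params["actor_token_type"] = ACCESS_TOKEN_TYPE
    return urlencode(params)

body = build_token_exchange_request("user-abc", "https://api.example.com", ["crm.read"])
```

The agent would send `body` with `Content-Type: application/x-www-form-urlencoded`. The returned token carries the user's permissions rather than a service account's.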

In many cases, it makes sense to constrain the agent's access even further. For example, if an employee has access to both Salesforce and a revenue dashboard, an agent drafting a sales cold email needs only Salesforce. Following the principle of least privilege, you should trim access to only what the task requires. In the following section, I will discuss concrete strategies for enforcing least privilege.
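Trimming access can be expressed as a set intersection: the agent receives only scopes that the user holds *and* the task needs. The scope names and the `TASK_SCOPES` mapping below are hypothetical.

```python
# Hypothetical mapping from agent task to the scopes it requires.
TASK_SCOPES = {
    "draft_cold_email": {"salesforce.read"},
}

def agent_scopes(user_scopes: set[str], task_scopes: set[str]) -> set[str]:
    """Scopes for one agent run: never more than the user has or the task needs."""
    missing = task_scopes - user_scopes
    if missing:
        # Fail loudly rather than silently escalating or half-running the task.
        raise PermissionError(f"user lacks scopes required by task: {sorted(missing)}")
    return user_scopes & task_scopes

USER_SCOPES = {"salesforce.read", "revenue_dashboard.read"}
print(sorted(agent_scopes(USER_SCOPES, TASK_SCOPES["draft_cold_email"])))  # prints ['salesforce.read']
```

The revenue dashboard scope never reaches the agent, even though the user holds it.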

Statically mirroring user permissions is the minimum security requirement for AI agents. Good security also accounts for the agent's intended behavior and context. At the same time, developers must not constrain access so tightly that it breaks day-to-day workflows.

The biggest failure mode in AI systems is standing access. A service account's long-lived token can stay valid long after a user has left or their role has changed. An agent holding such a token will keep serving requests even after the system has revoked the caller's access.

A better approach is to grant access to agents at runtime. The agent requests a credential each time it's invoked, and the system discards the credential once the task completes. On the next request, the agent goes back to the identity provider and receives a fresh credential that reflects the user's current permissions.

This approach is known as just-in-time (JIT) access. You can implement just-in-time access by using an authorization provider like Oso.
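The shape of just-in-time access can be sketched in plain Python. This is not Oso's API. It uses hypothetical in-memory stand-ins (`CURRENT_PERMISSIONS`, `REVOKED_TOKENS`) for the identity provider. Permissions are looked up when the credential is issued, the credential expires after a short TTL, and it is revoked as soon as the task ends.

```python
import secrets
import time
from contextlib import contextmanager
from dataclasses import dataclass

# Hypothetical stand-ins for the identity provider's state.
CURRENT_PERMISSIONS: dict[str, set[str]] = {"alice": {"crm.read"}}
REVOKED_TOKENS: set[str] = set()

@dataclass(frozen=True)
class Credential:
    token: str
    scopes: frozenset
    expires_at: float

    def is_valid(self) -> bool:
        # Valid only until it expires or is explicitly revoked.
        return time.time() < self.expires_at and self.token not in REVOKED_TOKENS

def issue_credential(user_id: str, ttl_seconds: float) -> Credential:
    # Permissions are read at issue time, so a revocation takes effect
    # on the agent's very next invocation.
    scopes = frozenset(CURRENT_PERMISSIONS.get(user_id, set()))
    return Credential(secrets.token_urlsafe(16), scopes, time.time() + ttl_seconds)

@contextmanager
def just_in_time(user_id: str, ttl_seconds: float = 60.0):
    cred = issue_credential(user_id, ttl_seconds)
    try:
        yield cred
    finally:
        REVOKED_TOKENS.add(cred.token)  # discard the credential when the task ends
```

A single agent invocation would then run inside `with just_in_time("alice") as cred:` and use `cred.token` for downstream calls. No credential outlives the task.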

Human-in-the-loop is an authorization strategy in which a human explicitly grants an AI agent access before it proceeds with a sensitive action. It works best for sensitive or potentially destructive actions, such as accessing healthcare data or deleting records, where a human should double-check whether the agent ought to execute that action.

The human in the loop could be the original user who invoked the AI agent. Their role is to confirm that the agent's chosen action is correct. This doesn't force the human to approve every single action; the benefit (and risk) of AI agents is that they can execute thousands of actions in minutes. Instead, the human grants approval for a type of risky operation, such as mutations or deletions. It's similar to how a program warns users before overwriting a file: once they confirm the first time, the program can safely continue overwriting other files.
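A minimal sketch of per-action-type approval follows. `RISKY_ACTIONS` and the `ask_human` callback, which might be a UI prompt to the invoking user, are hypothetical. The gate asks once per risky action type in a session and remembers approvals, but not denials.

```python
from typing import Callable

# Hypothetical classification of which action types need human approval.
RISKY_ACTIONS = {"update", "delete"}

class ApprovalGate:
    """Asks a human once per risky action type in a session, then remembers approvals."""

    def __init__(self, ask_human: Callable[[str, str], bool]):
        self.ask_human = ask_human  # e.g., a prompt shown to the invoking user
        self.approved_types: set[str] = set()

    def allow(self, action: str, target: str) -> bool:
        if action not in RISKY_ACTIONS or action in self.approved_types:
            return True
        if self.ask_human(action, target):
            self.approved_types.add(action)  # later actions of this type proceed
            return True
        return False  # denials are not cached, so the human is asked again next time
```

With this gate, the first `delete` in a session triggers a prompt and later deletes proceed without one. Reads never prompt.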

Not every environment is equal. A managed laptop inside a corporate network carries less risk than an unmanaged smartphone on public Wi-Fi. Of course, an AI agent most likely runs in the cloud rather than on an iPhone 12 connected to a coffee shop's Wi-Fi. Still, a company's security policy should include context-aware restrictions whenever agents execute from devices outside the corporate private network.

To enforce this, developers should evaluate an agent's permissions alongside device trust, geolocation, and even time of day. Any of these might indicate that the agent is operating outside a preferred boundary.
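Here is a minimal sketch of a context-aware check. The allowed countries and business hours are hypothetical policy values. The context fields are assumed to come from elsewhere, for example device trust from an MDM system and country from an IP lookup. Context can only narrow what the user's permissions allow; it never widens them.

```python
from dataclasses import dataclass
from datetime import datetime, time

@dataclass(frozen=True)
class RequestContext:
    device_managed: bool  # assumed to be reported by device management
    country: str  # assumed to come from an IP geolocation lookup
    timestamp: datetime  # assumed to be in the policy's local time zone

# Hypothetical policy values.
ALLOWED_COUNTRIES = {"US", "CA"}
BUSINESS_HOURS = (time(7, 0), time(20, 0))

def context_violations(ctx: RequestContext) -> list[str]:
    """Reasons the request falls outside the preferred boundary (empty if none)."""
    violations = []
    if not ctx.device_managed:
        violations.append("unmanaged device")
    if ctx.country not in ALLOWED_COUNTRIES:
        violations.append(f"country {ctx.country} not allowed")
    start, end = BUSINESS_HOURS
    if not (start <= ctx.timestamp.time() <= end):
        violations.append("outside business hours")
    return violations

def authorize(user_has_permission: bool, ctx: RequestContext) -> bool:
    # The user's permission is necessary; a clean context is also required.
    return user_has_permission and not context_violations(ctx)
```

Returning the list of violations, rather than a bare boolean, also gives the monitoring stack something useful to log.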

Sometimes an agent needs broader access for a limited period around a scheduled event. For example, an agent might need access to QuickBooks data for a yearly compliance audit, or to DevOps data for a quarterly incident review.

With an access management framework, developers can schedule these elevated permissions around the event and revoke them automatically once the window closes.
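Time-bound elevation can be modeled as grants that carry their own validity window. In the sketch below, the `TemporaryGrant` type, the scope names, and the audit dates are all hypothetical. Because the window is checked on every evaluation, the elevated scope disappears on its own when the window closes.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class TemporaryGrant:
    scope: str
    starts_at: datetime
    ends_at: datetime

    def active(self, now: datetime) -> bool:
        # Half-open window: active from starts_at up to, not including, ends_at.
        return self.starts_at <= now < self.ends_at

def effective_scopes(base: set[str], grants: list[TemporaryGrant], now: datetime) -> set[str]:
    # Elevated scopes apply only inside their window; no separate revocation step.
    return base | {g.scope for g in grants if g.active(now)}

# Hypothetical window for a yearly compliance audit.
audit = TemporaryGrant("quickbooks.read", datetime(2025, 1, 2), datetime(2025, 2, 1))
```

During January, `effective_scopes({"crm.read"}, [audit], now)` includes `quickbooks.read`. From February 1 onward, it does not.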

Good security assumes flaws; for example, a user might be over-permissioned, and consequently, their AI agent might also be over-permissioned. To account for this, developers should incorporate behavior as an authorization signal (especially for agents tackling sensitive actions).

For example, if an agent suddenly starts bulk-downloading data or issuing requests at 3 AM from an unregistered location, the system should automatically restrict its access without waiting for a human to notice. Good guardrails revoke tokens automatically, without requiring human-in-the-loop approval.

A good practice is to proactively wire these guardrails into existing monitoring stacks so they trigger alerts when needed.
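A behavioral guardrail can be as simple as a sliding-window counter. The sketch below assumes one guard per agent session. The `revoke` and `alert` callbacks are hypothetical hooks into the token issuer and the monitoring stack, and the threshold is illustrative. When the agent pulls too many records too quickly, the guard revokes its token and raises an alert with no human approval step.

```python
from collections import deque
from typing import Callable

class BulkDownloadGuard:
    """Revokes an agent's token if it pulls too many records within a sliding time window."""

    def __init__(self, max_records: int, window_seconds: float,
                 revoke: Callable[[str], None], alert: Callable[[str], None]):
        self.max_records = max_records
        self.window_seconds = window_seconds
        self.revoke = revoke  # hypothetical hook into the token issuer
        self.alert = alert  # hypothetical hook into the monitoring stack
        self.events: deque = deque()  # (timestamp, record_count) pairs
        self.total = 0
        self.tripped = False

    def record(self, token: str, count: int, now: float) -> bool:
        """Log a download of `count` records; returns False once the guard has tripped."""
        if self.tripped:
            return False
        self.events.append((now, count))
        self.total += count
        # Forget downloads that have slid out of the window.
        while self.events and self.events[0][0] <= now - self.window_seconds:
            _, old_count = self.events.popleft()
            self.total -= old_count
        if self.total > self.max_records:
            self.tripped = True
            self.revoke(token)  # cut access immediately
            self.alert(f"bulk download: {self.total} records in {self.window_seconds}s")
            return False
        return True
```

Once tripped, the guard stays tripped. Restoring access becomes a deliberate decision rather than something that happens automatically when the window slides.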

There are two main tradeoffs that come with keeping agents safe through permission inheritance. Both matter, but teams should consider what works best for them as they scale.

I’ll first outline the risks. Infamously, an AI coding agent on Replit deleted an entire production database. Had the agent's access been more constrained, such a destructive action wouldn't have been possible. Several measures could have averted it, including human-in-the-loop checks and restricted write (delete) access.

All authorization checks add latency, but some add more than others. The fastest approach uses standard tokens with cached credentials. Caching, however, introduces the possibility of stale state. To guard against this, developers can configure AI agents to re-check credentials rather than rely on a cached result.

Context-aware policies, meanwhile, are somewhat slower because they must fetch additional metadata, such as IP lookups, before reaching a decision.

This creates a choice. Should a business cache aggressively and forgo context-aware policies in favor of ultra-fast authorization? Or should it prioritize the strictest permissions and accept higher latency? Some businesses belong at either end of this spectrum, and others belong somewhere in the middle. For example, a sales CRM might prefer to cache permissions, since access changes rarely and fast record retrieval matters. Conversely, a banking application might prefer the stricter option, where even a slightly stale permission could have financial repercussions (e.g., someone who was rightfully removed from a bank account temporarily retaining access).
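The caching tradeoff can be reduced to a single knob: how long a decision may be reused. In the sketch below, the `check` callback stands in for whatever authoritative (and slower) authorization call a system makes, and the TTL values are illustrative. A CRM might use a few minutes. A banking application might set `ttl_seconds=0` so every request is checked fresh.

```python
import time
from typing import Callable

class CachedAuthorizer:
    """Reuses allow/deny decisions for ttl_seconds; ttl_seconds=0 always re-checks."""

    def __init__(self, check: Callable[[str, str, str], bool], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.check = check  # authoritative but slower authorization call
        self.ttl = ttl_seconds
        self.clock = clock
        self._cache: dict[tuple[str, str, str], tuple[bool, float]] = {}

    def is_allowed(self, user: str, action: str, resource: str) -> bool:
        key = (user, action, resource)
        now = self.clock()
        hit = self._cache.get(key)
        if hit is not None and now - hit[1] < self.ttl:
            return hit[0]  # fast path, possibly stale
        decision = self.check(user, action, resource)  # fresh decision
        self._cache[key] = (decision, now)
        return decision
```

Within the TTL, a revoked user can still be allowed. That is exactly the stale-state window described above, so the TTL should be no longer than the business can tolerate.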

Without the right architecture, teams struggle to manage complex permissions. For just-in-time and context-aware access to work, teams need to coordinate a set of inputs (device, location, behavior, etc.) and build an access endpoint that issues tokens. Developers must also write policies and ensure the system consistently enforces them during authorization.

For most companies, using an authorization-specific platform like Oso or a policy engine like OPA is preferable to building an in-house system. Delegated tokens, token exchange flows, and continuous validation make authorization complex, and teams typically get the best results by relying on a dedicated third-party framework.

The key takeaway is that good permission mirroring is systemic. It isn’t enough for an agent to respect user scope in one system but bypass it in another. CRMs, file repos, internal APIs, and cloud providers all handle permissions differently, and that messy landscape is what makes enforcing “least privilege” for agents so difficult to maintain at scale.

That is where dedicated authorization layers come in. Tools like Oso pull policies into a central control plane. Developers define rules once and enforce them everywhere. For AI systems, this ensures that agents never hold more authority than the users they represent.

If you want to learn more, check out the LLM authorization chapter in our Authorization Academy.

It means the agent only has the same permissions as the user who invoked it.

Without it, agents can bypass access controls and return data that users shouldn’t see.

They often have broad, long-lived access that ignores user roles and revocations.

Yes. Least privilege means trimming rights to what the agent actually needs.

Short-lived credentials issued at runtime, revoked immediately after use.