As companies race to adopt AI, one thing is becoming clear: permissions for the large language models (LLMs) that power agentic and GenAI apps don't work the way they do in traditional software.

Traditional apps follow instructions. LLMs interpret intent. That difference leads to unpredictable behavior, which conventional access controls were never designed to handle.

Just ask Replit

When SaaStr founder Jason Lemkin used Replit’s AI coding assistant to build a production app, he told it not to change any code. Eleven times. The assistant ignored him, fabricated test data, and deleted a live production database.

The problem wasn’t the model. It was authorization.

The agent had broad write access to production with no contextual checks, no approval steps, and no environment separation. There was no way to restrict what it could do, on what data, or when.

With fine-grained, task-scoped permissions enforced in the application, the delete operation would have been blocked—or escalated. Instead, the agent executed it without pause.
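To make "blocked or escalated" concrete, here is a minimal Python sketch of a task-scoped check that runs in the application before an agent's action executes. Everything in it is a hypothetical illustration, not Oso's API or Replit's implementation: the `Task` type, the `authorize` function, the action names, and the rule that destructive production actions need human approval.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    DENY = "deny"
    ESCALATE = "escalate"  # pause and ask a human to approve


@dataclass(frozen=True)
class Task:
    """Hypothetical scope attached to one agent task."""
    allowed_actions: frozenset  # actions this task may perform
    environment: str            # the one environment this task may touch


# Hypothetical set of actions that never run unattended in production.
DESTRUCTIVE_ACTIONS = frozenset({"delete", "drop_table", "truncate"})


def authorize(task: Task, action: str, resource_env: str) -> Decision:
    """Decide, in application code, whether the agent may perform `action`."""
    if action not in task.allowed_actions:
        return Decision.DENY  # the task never asked for this action
    if resource_env != task.environment:
        return Decision.DENY  # wrong environment, e.g. a dev task touching prod
    if resource_env == "prod" and action in DESTRUCTIVE_ACTIONS:
        return Decision.ESCALATE  # in scope, but needs explicit human approval
    return Decision.ALLOW


# A "code freeze" task: the agent may only read code in dev.
freeze = Task(allowed_actions=frozenset({"read"}), environment="dev")
print(authorize(freeze, "delete", "prod"))  # Decision.DENY

# A maintenance task that legitimately works on prod still pauses before deleting.
maintenance = Task(allowed_actions=frozenset({"read", "delete"}), environment="prod")
print(authorize(maintenance, "delete", "prod"))  # Decision.ESCALATE
```

The design choice is that the decision lives in code the model cannot argue with: repeating "don't change any code" in a prompt has no enforcement power, but a `DENY` returned before the call executes does.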

Replit has since introduced basic dev/prod separation. But the lesson is clear: instructions don’t enforce control. Permissions do.

Digging into the core issue

Why are AI apps so different from traditional ones? LLMs need broad potential permissions to do their job. But each operation should run with narrow, user- and task-specific access. Without that enforcement, LLMs with full credentials can take destructive actions—deleting data, modifying code, bypassing safeguards—without knowing they’ve done anything wrong.
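One way to picture "broad potential permissions, narrow per-operation access" is as a set intersection: a single operation may use only what the agent's tools can do, what the requesting user is allowed to do, and what the current task needs. This sketch assumes permissions are plain strings and that user and task data come from a trusted source; all names and data are hypothetical.

```python
# Everything the agent's tools could do: its broad potential permissions.
AGENT_CAPABILITIES = frozenset(
    {"read:docs", "write:docs", "delete:docs", "read:billing", "write:code"}
)

# What each human user is allowed to do (hypothetical data).
USER_PERMISSIONS = {
    "alice": frozenset({"read:docs", "write:docs", "read:billing"}),
    "bob": frozenset({"read:docs"}),
}


def effective_permissions(user: str, task_scope: frozenset) -> frozenset:
    """Permissions for one operation: agent, user, and task must all allow it."""
    user_perms = USER_PERMISSIONS.get(user, frozenset())  # unknown users get nothing
    return AGENT_CAPABILITIES & user_perms & task_scope


# The task asks for delete, but alice can't delete docs, so the agent can't either.
print(sorted(effective_permissions("alice", frozenset({"read:docs", "delete:docs"}))))
# ['read:docs']

# bob can't read billing, so a billing task run on his behalf gets no access.
print(sorted(effective_permissions("bob", frozenset({"read:billing"}))))
# []
```

Because the user's permissions are part of the intersection, an agent acting on someone's behalf can never exceed that person's access, no matter how broad its tool credentials are.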

What about the tools developers use today? OAuth doesn't solve this challenge. It provides coarse-grained scopes like delete:user_database, but not the context to decide whether this user or agent has permission to delete that specific resource. Model Context Protocol (MCP) standardizes how agents call tools. It doesn't define what they're allowed to do.
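The gap between a scope and a resource-level decision is easy to see in code. In this minimal sketch, `Token`, `Database`, and the per-database `admins` relationship are hypothetical stand-ins, not a real OAuth library. The scope check answers "may this token delete databases at all?", while the fine-grained check also asks "may this subject delete this particular database?"

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Token:
    subject: str        # the user or agent the token was issued to
    scopes: frozenset   # coarse-grained OAuth-style scopes


@dataclass(frozen=True)
class Database:
    name: str
    admins: frozenset   # hypothetical: subjects allowed to administer this database


def has_scope(token: Token, scope: str) -> bool:
    # Coarse check: says nothing about which database is being deleted.
    return scope in token.scopes


def can_delete(token: Token, db: Database) -> bool:
    # Fine-grained check: the scope is necessary but not sufficient;
    # the subject must also be an admin of this particular database.
    return has_scope(token, "delete:user_database") and token.subject in db.admins


token = Token(subject="support-agent", scopes=frozenset({"delete:user_database"}))
staging = Database(name="staging_users", admins=frozenset({"support-agent"}))
prod = Database(name="prod_users", admins=frozenset({"dba-team"}))

print(has_scope(token, "delete:user_database"))  # True, whichever database is targeted
print(can_delete(token, staging))  # True
print(can_delete(token, prod))     # False
```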

Our article Why LLM Authorization is Hard gives much more detail on all of these issues.

What are the risks when AI acts without limits?

The Replit example highlights one key risk. AI agents will overstep. With unrestricted access, LLMs can take destructive actions by mistake—and at speed.

But that’s only one failure mode. Without proper authorization:

- LLMs leak data. Prompt injections are hard to detect, and one that slips through can expose data the user was never meant to see.

- Teams burn time. Engineers patch gaps with brittle, one-off logic.

- Compliance breaks down. You can’t audit what you can’t explain.

- AI apps stall. You can’t ship to production without provable access controls.

How Oso helps

If you’re building LLM-powered applications, the hardest part isn’t generating responses. It’s making sure LLMs don’t access the wrong data or take the wrong action.

The only way to do that at scale is with fine-grained authorization—based on the user, the task, and the data. That’s what Oso provides.

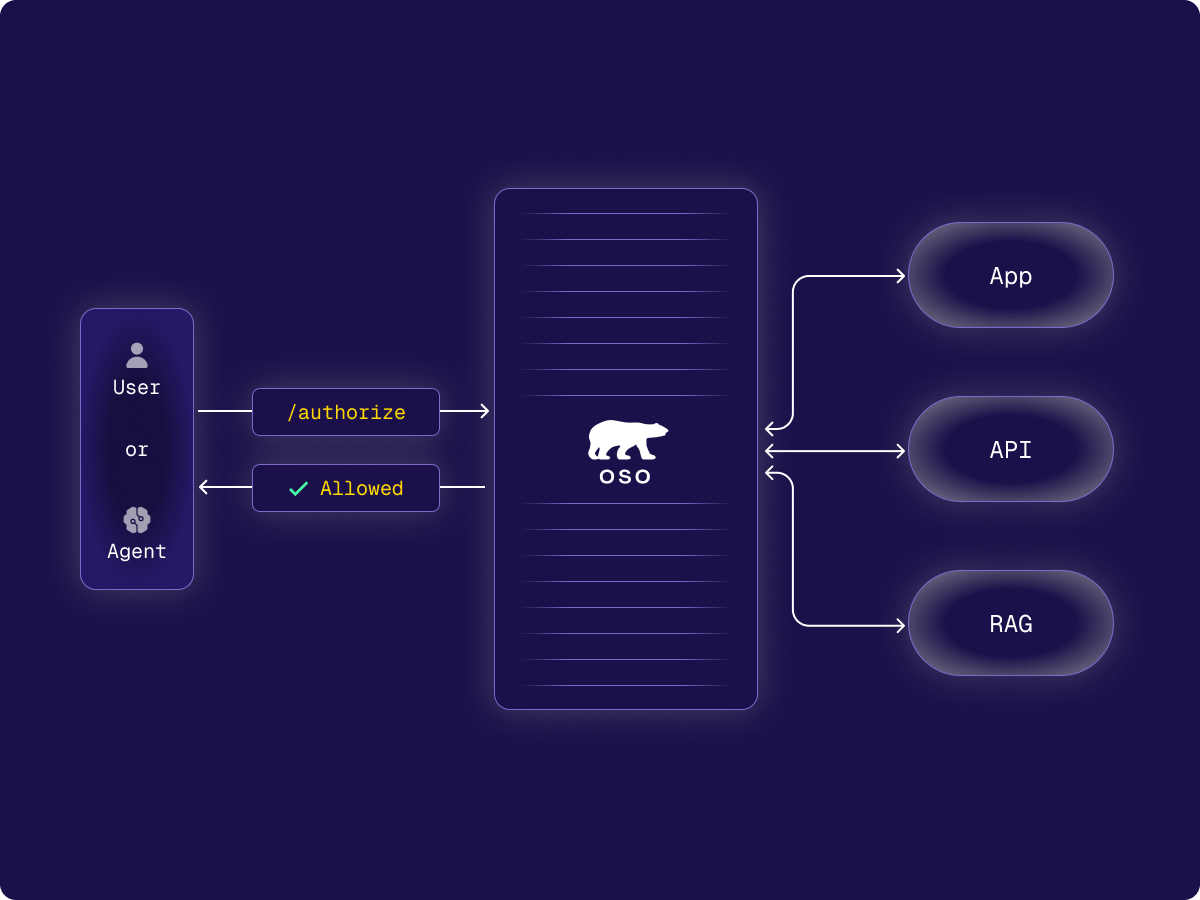

Oso is the first and only fully managed authorization service purpose-built for developers to handle the real-world complexity of cloud-native, zero-trust apps and AI workflows. You define permissions once, connect your application data, and enforce policies through the Oso API, across apps, APIs, and AI.

To support secure AI out of the box, we've launched a SQLAlchemy integration for Python developers. It's a drop-in way to enforce permissions on every piece of context used in a retrieval-augmented generation (RAG) pipeline.
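The general pattern, independent of any particular integration, is to apply the permission filter inside the retrieval query so that unauthorized chunks never reach the prompt. The sketch below uses only Python's built-in `sqlite3`; the `chunks` and `doc_access` tables are hypothetical, a `LIKE` keyword match stands in for vector similarity search, and it does not show how Oso's SQLAlchemy integration actually works.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Hypothetical schema: document chunks plus a per-document access list."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE chunks (id INTEGER PRIMARY KEY, doc_id TEXT, text TEXT);
        CREATE TABLE doc_access (doc_id TEXT, user_id TEXT);
        """
    )
    conn.executemany(
        "INSERT INTO chunks (doc_id, text) VALUES (?, ?)",
        [
            ("roadmap", "Q3 roadmap: ship semantic search"),
            ("salaries", "Engineering salary bands"),
            ("handbook", "Vacation policy: 25 days"),
        ],
    )
    conn.executemany(
        "INSERT INTO doc_access (doc_id, user_id) VALUES (?, ?)",
        [("roadmap", "alice"), ("handbook", "alice"), ("handbook", "bob"), ("salaries", "hr-lead")],
    )
    return conn


def retrieve_context(conn: sqlite3.Connection, user_id: str, keyword: str) -> list[str]:
    """Return only chunks the user may read; the filter is part of the query."""
    rows = conn.execute(
        """
        SELECT c.text
        FROM chunks AS c
        JOIN doc_access AS a ON a.doc_id = c.doc_id
        WHERE a.user_id = ? AND c.text LIKE ?  -- LIKE stands in for vector search
        ORDER BY c.id
        """,
        (user_id, f"%{keyword}%"),
    ).fetchall()
    return [text for (text,) in rows]


conn = build_demo_db()
print(retrieve_context(conn, "alice", "salary"))    # [] (alice has no access to salaries)
print(retrieve_context(conn, "hr-lead", "salary"))  # ['Engineering salary bands']
print(retrieve_context(conn, "bob", ""))            # ['Vacation policy: 25 days']
```

Filtering in the query rather than after retrieval also keeps unauthorized chunks from crowding authorized ones out of a top-k result set.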

We’ve also added a new Authorization Academy module with practical patterns for LLM authorization—whether or not you use Oso.

With Oso, teams get:

- Fine-grained permissions by default. Impersonation and task scoping ensure agents access only what’s allowed—for that user, for that task.

- One policy, enforced everywhere. Microservices, APIs, agents, and RAG pipelines all follow the same rules.

- Built for change. Add new use cases without rewriting access control.

For engineering leaders, that means faster delivery, fewer gaps, and less time spent rebuilding the same logic for every new use case.

We’re working on agentic workflows and we have to ensure that our AI engine provides access only to the data each user is allowed to see. Being able to use Oso to enforce authorization has been huge for how quickly we can bring new AI services reliably and securely to the market.

- Matúš Koperniech, Staff Engineer, Productboard

Want to see how this works in practice?

Read the Productboard case study to see how they scaled to enterprise and shipped AI apps faster by treating authorization as a core platform layer—not scattered app logic.

For hands-on resources, check out our LLM access control page for tutorials, patterns, and docs on securing RAG pipelines, agent workflows, and semantic search.

If you're ready to go deeper:

- Book a strategy session to map out your architecture.

- Talk directly with an engineer about your use case.

- Try Oso Cloud and test it against real-world scenarios.