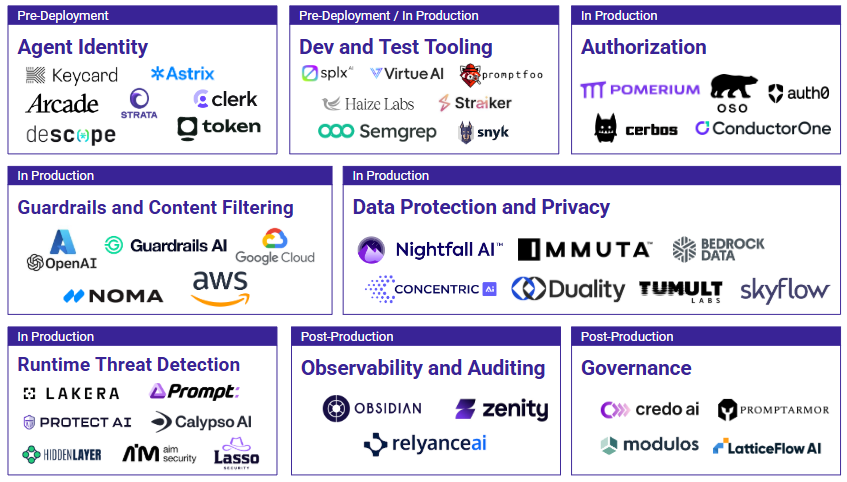

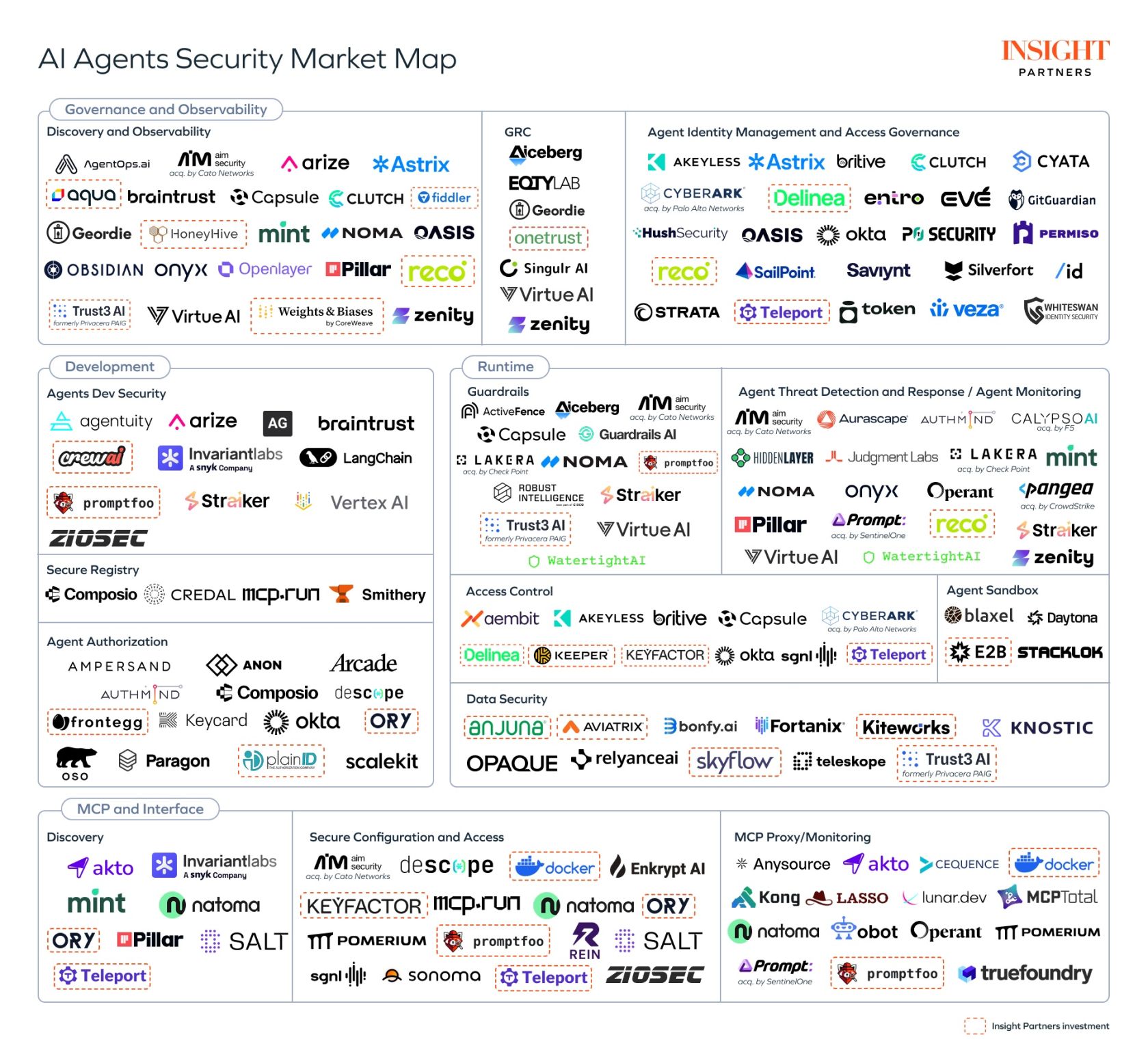

We’re honored to be included in two reports published today: the Agent Security Market Landscape from Ansa and Securing the autonomous future: Trust, safety, and reliability of agentic AI from Insight Partners. We’ve been spending a lot of our engineering time on agentic authorization problems.

Authorization for agents is complex. Often, an agent is acting on behalf of a human, so it would seem natural to inherit that person’s permissions. We know, however, that humans are routinely over-permissioned. An employee might have global edit access to Salesforce, with the rationale that a) they’ll know better than to edit records they don’t own, and b) the potential damage they could cause is limited by wall clock time — they work during working hours, and there’s only so fast they can click through screens. An LLM agent with access to Salesforce, however, doesn’t know any better and can access data orders of magnitude faster than a human. In the case of autonomous agents — those that aren’t acting on behalf of a human — how do you define permissions scopes appropriately from first principles?

Our recommended best practice for LLM authorization is to constrain permissions to the intersection of what the agent is capable of doing, the active human user’s permissions, and the required permissions for the task at hand. That goes a long way toward reducing over-permissioning, though enforcing least privilege for agents dynamically is difficult — more on that later. Taking this approach means that agent identity alone is never enough; as the Insight Partners authors write, “an authorization layer is still required to define and enforce what each agent is permitted to do once authenticated.”

It bears saying that agentic permissions can’t be handled in prompting. There are certainly guardrails you can put in system prompts, sanitization you can perform on user prompts, and more. Those serve a purpose in other parts of the agent security landscape — for example, controlling agent voice and tone, or checking for PII in inputs. But prompts are interpreted, not enforced. The only way to be confident that an agent won’t access data or take actions inappropriately is to deny access to do so. That needs to be handled in a deterministic authorization service like Oso.

Ansa’s market landscape encourages readers to “have a strong sense of the product roadmap of prospective vendors and ensure they align with where the market is heading.” To that end, let’s spend some time on what’s coming next.

First, we continue to invest in the core Oso platform that powers authorization at customers like Duolingo, Productboard, Oyster, and more. The same engine handles authorization for agents and humans in applications, RAG workflows, and all other uses. Enhancements we make here will improve agentic authorization. For example, reliability and performance are critical for all customers, but performance has compounding effects with agents: when a single prompt might kick off multiple tool calls, the latency of each interaction stacks up while a human is waiting on results.

Second, we’re not confining Oso to the Authorization categories as currently defined in these reports. Because agents dynamically adapt execution and data flows to meet their objectives, authorization needs to dynamically adapt at runtime. It’s not enough to just enforce permissions at agent invocation. Rather, your authorization system needs to continuously observe agent and tool execution, dynamically applying control when needed and logging every decision for downstream auditing.To address this need, we’re building Automated Least Privilege for Agents. We’re developing a way to keep agents on a tight leash, with ongoing monitoring and risk categorization of agentic data access and actions, alerting for anomalous agent behaviours, automated response recommendations with single click application of changes, and auditing of authorization decisions for agent actions. If you’re interested in joining the beta for Automated Least Privilege for Agents, get in touch.

Third, we’re looking to the future. Today, we’re applying authorization to agents. What about applying agents to authorization? Let me be clear that Oso is not doing this today: agents don’t yet act reliably enough to control a critical component like authorization, so deterministic rules are needed. But we also know that the way organizations write their authorization logic today is flawed: humans make mistakes, users are routinely overpermissioned, and permissions are rarely updated proactively. As AI agents improve, we might reach a point where an agent-controlled authorization scheme would be better. Consider the argument for self-driving cars: yes, they’ll still get in accidents sometimes, but if they are overwhelmingly safer than human drivers, they’re a positive. Similarly, if we reach a point where an agent can assign privileges correctly 99.9% of the time, that would be a significant improvement on the current human-led approach. Oso is researching this application of AI agents, with our customers’ security as our primary concern.

We agree with Ansa and Insight Partners that agentic security is a moving target rather than a solved problem. A few years ago, the general public hadn’t heard about LLMs; MCP was announced less than one year ago and has quickly become the primary way that people expose tools to agents. Because we engineered from the outset for flexibility by supporting any authorization model — RBAC, ABAC, ReBAC, or your own custom mix — we can easily adapt as the market evolves. Neither Oso nor our customers are locked into a rigid authorization model that can't respond to new requirements of intelligent and autonomous software. We’re excited to keep building for the future.

Want to learn more about how to control permissions for agents? Chat with an Oso Engineer.

.png)